# 5 Million WebSockets

Date - May 20, 2019

Oatpp version - 0.19.4

This article describes the oatpp benchmark for 5 million fully loaded concurrent WebSocket connections.

It consists of two parts:

- 4M WebSockets
- 5M WebSockets

## The Purpose

This benchmark aims to determine how oatpp scales as both load and computing power increase, relative to the previous 2-million WebSockets benchmark.

| | Previous, 2M benchmark | This, 4M / 5M benchmark |
|---|---|---|
| Computing power | 8 vCPUs, 52 GB memory | 16 vCPUs, 104 GB memory |
| Load | 2M connections | 4M / 5M connections |
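One way to read the table: the 4M run keeps the load per vCPU the same as in the 2M run, while the 5M run pushes it 25% higher. A small bash sketch of that arithmetic, using only the figures from the table:

```bash
#!/usr/bin/env bash
# Connections per vCPU for each benchmark run (figures from the table above).
# Integer arithmetic is exact here because every division comes out even.
per_vcpu() {
  local connections=$1 vcpus=$2
  echo $(( connections / vcpus ))
}

PREV_2M=$(per_vcpu 2000000 8)    # previous benchmark: 2M connections on 8 vCPUs
RUN_4M=$(per_vcpu 4000000 16)    # this benchmark: 4M connections on 16 vCPUs
RUN_5M=$(per_vcpu 5000000 16)    # this benchmark: 5M connections on 16 vCPUs

echo "2M / 8 vCPUs  -> ${PREV_2M} connections per vCPU"
echo "4M / 16 vCPUs -> ${RUN_4M} connections per vCPU"
echo "5M / 16 vCPUs -> ${RUN_5M} connections per vCPU"
```

Both the 2M and 4M runs work out to 250,000 connections per vCPU, so the 4M run is the like-for-like scaling comparison; the 5M run (312,500 per vCPU) goes beyond it.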

## 4M WebSockets

### Setup

- Server Machine - Google Cloud n1-highmem-16 (16 vCPUs, 104 GB memory) running Debian GNU/Linux 9.
- Client Machine - Google Cloud n1-highmem-16 (16 vCPUs, 104 GB memory) running Debian GNU/Linux 9.

The server application listens on 400 ports, from 8000 to 8399, to prevent ephemeral port exhaustion on the client, since all 4M clients run on the same machine.

When a message arrives on a WebSocket, the server echoes it back with "Hello from oatpp!" prepended.

The client application opens 10k connections on each port and waits until all connections are ready (all WebSocket handshakes are done), then starts the load. Each of the 4 million WebSocket clients continuously sends messages to the server: once a message is sent, the client sends another one.

Both the server and the client run the asynchronous oatpp server/client, based on oatpp coroutines.
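The port layout follows from simple arithmetic. A TCP connection is identified by its (client IP, client port, server IP, server port) tuple, so from a single client IP each server port can accept at most as many connections as there are ephemeral ports on the client. The sketch below assumes the common Linux default `net.ipv4.ip_local_port_range` of `32768 60999` (the repository's `sock-config` script may configure a different range); under that assumption at least 142 server ports would be needed, and 10k connections per port stays well below the per-port ceiling.

```bash
#!/usr/bin/env bash
# Connection topology for the 4M run.
BASE_PORT=8000
PORT_COUNT=400            # server listens on 8000..8399
CONNS_PER_PORT=10000      # client opens 10k connections per port

TOTAL=$(( PORT_COUNT * CONNS_PER_PORT ))
LAST_PORT=$(( BASE_PORT + PORT_COUNT - 1 ))

# Assumption: the default net.ipv4.ip_local_port_range of "32768 60999".
# One client IP can use each ephemeral port only once per server ip:port,
# so a single server port caps out at this many connections from this client.
EPH_LOW=32768
EPH_HIGH=60999
EPH_PORTS=$(( EPH_HIGH - EPH_LOW + 1 ))

# Minimum number of server ports needed for TOTAL connections (ceiling division).
MIN_PORTS=$(( (TOTAL + EPH_PORTS - 1) / EPH_PORTS ))

echo "ports ${BASE_PORT}..${LAST_PORT}: ${TOTAL} connections"
echo "ephemeral ports per server port: ${EPH_PORTS}, minimum server ports: ${MIN_PORTS}"
```

The same arithmetic gives 500 × 10k = 5M connections for the 5M run.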

### Results

The server showed stable performance throughout the benchmark, delivering about 17 million messages per minute (~57.5 Mb/second):

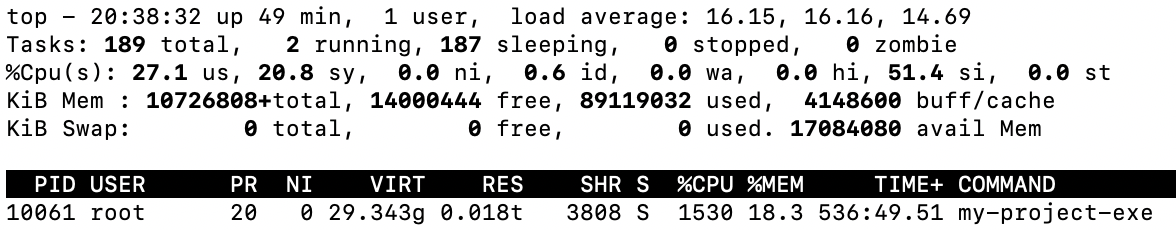

### Server Stats

#### Resource consumption

Server memory consumption was stable at about 30 GB.

#### Throughput

```
SOCKETS: 4000000 # - Number of connected clients
FRAMES_TOTAL: 573911830 # - Frames received by server (total)
MESSAGES_TOTAL: 573905877 # - Messages received by server (total)
FRAMES_PER_MIN: 17373801.439247 # - Frames received rate per minute
MESSAGES_PER_MIN: 17372968.482111 # - Messages received rate per minute
```
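To put the per-minute counters in perspective, they can be converted into per-second and per-connection rates. This bash sketch uses `awk` for the floating-point division (bash arithmetic is integer-only) and only the `SOCKETS` and `MESSAGES_PER_MIN` values reported above:

```bash
#!/usr/bin/env bash
# Convert the server-side 4M counters into per-second and per-connection rates.
SOCKETS=4000000
MESSAGES_PER_MIN=17372968.482111

# Total messages per second across all connections.
MSG_PER_SEC=$(awk -v m="$MESSAGES_PER_MIN" 'BEGIN { printf "%.0f", m / 60 }')

# Average messages per minute handled for a single connection.
MSG_PER_CONN_PER_MIN=$(awk -v m="$MESSAGES_PER_MIN" -v s="$SOCKETS" \
  'BEGIN { printf "%.2f", m / s }')

# Average seconds between two messages on the same connection.
SEC_BETWEEN_MSGS=$(awk -v m="$MESSAGES_PER_MIN" -v s="$SOCKETS" \
  'BEGIN { printf "%.1f", 60 * s / m }')

echo "messages/second:                ${MSG_PER_SEC}"
echo "messages/minute per connection: ${MSG_PER_CONN_PER_MIN}"
echo "seconds between messages:       ${SEC_BETWEEN_MSGS}"
```

With 4M clients sharing roughly 290k messages per second, an individual connection gets a message through about once every 14 seconds on average, so this load stresses the number of concurrent connections far more than per-connection throughput.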

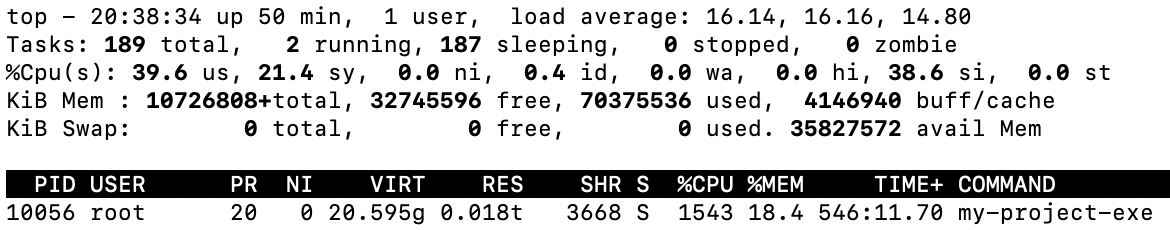

### Client Stats

#### Resource consumption

Client memory consumption was stable at about 20.5 GB.

#### Throughput

```
SOCKETS: 4000000 # - Number of connected clients
FRAMES_TOTAL: 516770460 # - Frames received by client (total)
MESSAGES_TOTAL: 516405193 # - Messages received by client (total)
FRAMES_PER_MIN: 16801610.114129 # - Frames received rate per minute
MESSAGES_PER_MIN: 16472169.881512 # - Messages received rate per minute
```

## 5M WebSockets

### Setup

- Server Machine - Google Cloud n1-highmem-16 (16 vCPUs, 104 GB memory) running Debian GNU/Linux 9.
- Client Machine - Google Cloud n1-highmem-16 (16 vCPUs, 104 GB memory) running Debian GNU/Linux 9.

The server application listens on 500 ports, from 8000 to 8499, to prevent ephemeral port exhaustion on the client, since all 5M clients run on the same machine.

When a message arrives on a WebSocket, the server echoes it back with "Hello from oatpp!" prepended.

The client application opens 10k connections on each port and waits until all connections are ready (all WebSocket handshakes are done), then starts the load. Each of the 5 million WebSocket clients continuously sends messages to the server: once a message is sent, the client sends another one.

Both the server and the client run the asynchronous oatpp server/client, based on oatpp coroutines.

As Linux socket buffers are the main source of memory consumption, for 5M connections it was necessary to reduce `net.ipv4.tcp_rmem` for the test to be stable:

```bash
sysctl -w net.ipv4.tcp_rmem='2048 2048 2048'
```

We reduce the read buffers here because doing so appeared to have minimal performance impact in this particular case.
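`net.ipv4.tcp_rmem` takes three per-socket values in bytes: `min`, `default` (the initial receive buffer size) and `max` (the ceiling for receive-buffer autotuning), so setting all three to 2048 pins every socket's receive buffer at 2 KB. The sketch below estimates an upper bound on receive-buffer memory if every socket's buffer were full at the same time. The 87380-byte default is an assumption (a long-standing Linux default for the middle value, not read from the test machines), and real usage is lower because buffer memory is only consumed while data is queued.

```bash
#!/usr/bin/env bash
# Upper bound on receive-buffer memory if every socket's buffer were full at once.
SOCKETS=5000000

rmem_bound_bytes() {
  local sockets=$1 bytes_per_socket=$2
  echo $(( sockets * bytes_per_socket ))
}

# Assumption: 87380 bytes as the "default" value; check `sysctl net.ipv4.tcp_rmem`.
DEFAULT_BOUND=$(rmem_bound_bytes "$SOCKETS" 87380)
# Value used in this benchmark: sysctl -w net.ipv4.tcp_rmem='2048 2048 2048'
TUNED_BOUND=$(rmem_bound_bytes "$SOCKETS" 2048)

echo "default (87380 B/socket): $(( DEFAULT_BOUND / 1000000000 )) GB"
echo "tuned   (2048 B/socket):  $(( TUNED_BOUND / 1000000000 )) GB"
```

Even as a rough bound, the assumed default would exceed the instance's 104 GB several times over (and autotuning can grow buffers beyond it), while 2 KB per socket keeps the bound around 10 GB.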

### Results

The server showed stable performance throughout the benchmark, delivering about 18 million messages per minute (~58 Mb/second):

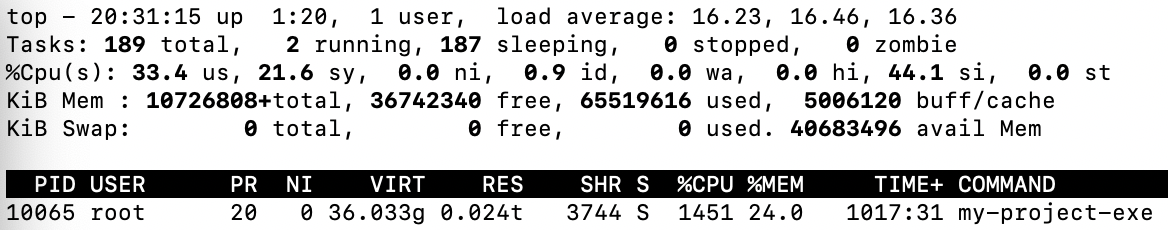

### Server Stats

#### Resource consumption

Server memory consumption was stable at about 36 GB.

#### Throughput

```
SOCKETS: 5000000 # - Number of connected clients
FRAMES_TOTAL: 1179521220 # - Frames received by server (total)
MESSAGES_TOTAL: 1177610133 # - Messages received by server (total)
FRAMES_PER_MIN: 19625257.718400 # - Frames received rate per minute
MESSAGES_PER_MIN: 19619426.046304 # - Messages received rate per minute
```

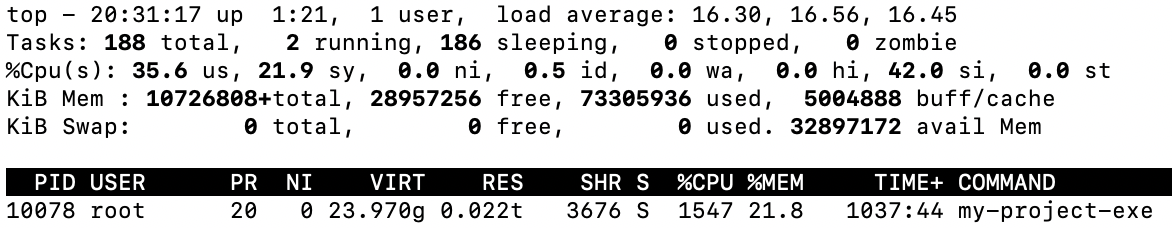

### Client Stats

#### Resource consumption

Client memory consumption was stable at about 24 GB.

#### Throughput

```
SOCKETS: 5000000 # - Number of connected clients
FRAMES_TOTAL: 1108906831 # - Frames received by client (total)
MESSAGES_TOTAL: 1097120434 # - Messages received by client (total)
FRAMES_PER_MIN: 17878571.176088 # - Frames received rate per minute
MESSAGES_PER_MIN: 17612701.369327 # - Messages received rate per minute
```
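Dividing the reported memory figures by the number of connections gives an approximate per-connection footprint for both runs. The sketch assumes "GB" means 10^9 bytes and works in tenths of a GB so that bash integer arithmetic stays exact; the inputs are the rounded totals from the stats sections:

```bash
#!/usr/bin/env bash
# Approximate memory per connection from the reported (rounded) totals.
# Assumption: "GB" in the text means 10^9 bytes.
bytes_per_conn() {
  local gb_tenths=$1 connections=$2   # memory given in tenths of a GB (0.1 GB = 10^8 bytes)
  echo $(( gb_tenths * 100000000 / connections ))
}

SERVER_4M=$(bytes_per_conn 300 4000000)   # 30 GB,   4M sockets
SERVER_5M=$(bytes_per_conn 360 5000000)   # 36 GB,   5M sockets
CLIENT_4M=$(bytes_per_conn 205 4000000)   # 20.5 GB, 4M sockets
CLIENT_5M=$(bytes_per_conn 240 5000000)   # 24 GB,   5M sockets

echo "server: ${SERVER_4M} B/conn (4M), ${SERVER_5M} B/conn (5M)"
echo "client: ${CLIENT_4M} B/conn (4M), ${CLIENT_5M} B/conn (5M)"
```

Server memory per connection drops from about 7.5 KB to 7.2 KB (and the client's from about 5.1 KB to 4.8 KB) between the runs, which coincides with the `tcp_rmem` reduction; the rounded totals do not allow a more precise attribution.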

## Steps to Reproduce

Create two n1-highmem-16 (16 vCPUs, 104 GB memory) Debian GNU/Linux 9 instances in the same VPC on Google Cloud.

### Execute the following commands on both instances (SSH)

- Install git:

```bash
$ sudo su
$ apt-get update
...
$ apt-get install -y git
...
```

- Clone the benchmark-websocket repo and `cd` to the repo folder:

```bash
$ git clone https://github.com/oatpp/benchmark-websocket
...
$ cd benchmark-websocket
```

- Install the `oatpp` and `oatpp-websocket` modules (run the `./prepare.sh` script):

```bash
$ ./prepare.sh
```

- Configure the environment (run the `./sock-config-5m.sh` script):

```bash
$ ./sock-config.sh
$ ulimit -n 6000000
```
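Each WebSocket connection holds one file descriptor, so the open-file limit must exceed the connection count (`ulimit -n 6000000` leaves headroom over 5M). `ulimit` applies to the current shell and the processes it starts, and on Linux it cannot be raised above `fs.nr_open`. A hedged bash sketch of a pre-flight check; `check_fd_limits` is a hypothetical helper, not part of the benchmark repository:

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: can this shell open enough file descriptors?
# Each socket is one fd; listeners and other open files need a few more.
check_fd_limits() {
  local required=$1
  local soft
  soft=$(ulimit -n)
  if [ "$soft" != "unlimited" ] && [ "$soft" -lt "$required" ]; then
    echo "ulimit -n is ${soft}, need at least ${required}" >&2
    return 1
  fi
  # On Linux, the open-file limit cannot be raised above fs.nr_open.
  if [ -r /proc/sys/fs/nr_open ]; then
    local nr_open
    nr_open=$(cat /proc/sys/fs/nr_open)
    if [ "$nr_open" -lt "$required" ]; then
      echo "fs.nr_open is ${nr_open}, need at least ${required}" >&2
      return 1
    fi
  fi
  echo "fd limits OK for ${required} descriptors"
}
```

For example, run `check_fd_limits 5000000` in the same shell after `ulimit -n 6000000` and before starting the server or client executable.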

### Build and Run Server

Commands for the server instance only:

- Build the server:

```bash
$ cd server/build/
$ cmake ..
$ make
```

- Run the server:

```bash
$ ./wsb-server-exe --tp 16 --tio 8 --pc 500
```

where:

- `--tp` - number of data-processing threads.
- `--tio` - number of I/O workers.
- `--pc` - number of ports to listen on.

### Build and Run Client

Commands for the client instance only:

- Build the client:

```bash
$ cd client/build/
$ cmake ..
$ make
```

- Run the client:

```bash
$ ./wsb-client-exe --tp 16 --tio 8 -h <server-private-ip> --socks-max 5000000 --socks-port 10000 --si 1000 --sf 30 --pc 500
```

where:

- `--tp` - number of data-processing threads.
- `--tio` - number of I/O workers.
- `-h <server-private-ip>` - substitute the private IP of the server instance here.
- `--socks-max` - how many client connections to establish.
- `--socks-port` - how many client connections to open per port.
- `--si 1000 --sf 30` - control how fast clients connect to the server. Here, the client sleeps for 30 milliseconds after every 1000 iterations.
- `--pc` - number of available server ports to connect to.

Note - clients will not start the load until all clients are connected.

Note - the client app will fail with an assertion if any of the clients fails.
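The client flags must agree with each other: `--socks-max` divided by `--socks-port` gives the number of server ports required, which must not exceed `--pc`, and `--si`/`--sf` put a lower bound on how long the connect phase takes. The sketch assumes one iteration corresponds to one connection attempt, which the flag description does not state explicitly:

```bash
#!/usr/bin/env bash
# Sanity-check the client flags from the command above.
SOCKS_MAX=5000000   # --socks-max
SOCKS_PORT=10000    # --socks-port
PC=500              # --pc
SI=1000             # --si: sleep after every SI iterations (assumed: one per connection)
SF=30               # --sf: sleep duration in milliseconds

# Every connection must fit onto one of the PC server ports (ceiling division).
PORTS_NEEDED=$(( (SOCKS_MAX + SOCKS_PORT - 1) / SOCKS_PORT ))
if [ "$PORTS_NEEDED" -gt "$PC" ]; then
  echo "--socks-max needs ${PORTS_NEEDED} ports but --pc is ${PC}" >&2
fi

# Lower bound on the connect phase from sleeping alone (handshake time not included).
SLEEP_MS=$(( SOCKS_MAX / SI * SF ))
echo "ports needed: ${PORTS_NEEDED} of ${PC}; ramp-up sleep: $(( SLEEP_MS / 1000 )) s"
```

With these values, the client needs exactly 500 ports and spends at least 150 seconds sleeping during ramp-up; since the load starts only after all clients are connected, this also bounds how early measurements can begin.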

## Conclusion

Previous results for 2M WebSockets were 9 million messages per minute (~32.7 Mb/second), so we expected to get around 18 million messages per minute (~64 Mb/second) in this benchmark, as computing power was doubled.

The actual results are 17-18 million messages per minute at ~58 Mb/second, which is a good result, close to expectations.

At this point, oatpp has shown almost constant I/O performance with respect to load increase.
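The "close to expectations" claim can be quantified with the rounded figures quoted in this article: an expected 18 million messages per minute and ~64 Mb/second, against actual results of 17 million / ~57.5 Mb/second (4M) and 18 million / ~58 Mb/second (5M). A bash sketch:

```bash
#!/usr/bin/env bash
# Actual vs expected throughput, using the rounded figures quoted in this article.
EXPECTED_MSGS=18        # million messages per minute expected after doubling compute
EXPECTED_MBPS=64        # "~64 Mb/second" expected after doubling compute

# Percentage of the expected value reached, to one decimal place.
ratio_pct() {
  awk -v a="$1" -v e="$2" 'BEGIN { printf "%.1f", 100 * a / e }'
}

RATIO_MSG_4M=$(ratio_pct 17 "$EXPECTED_MSGS")     # 4M run: ~17M messages/min
RATIO_MSG_5M=$(ratio_pct 18 "$EXPECTED_MSGS")     # 5M run: ~18M messages/min
RATIO_BW_4M=$(ratio_pct 57.5 "$EXPECTED_MBPS")    # 4M run: ~57.5 Mb/second
RATIO_BW_5M=$(ratio_pct 58 "$EXPECTED_MBPS")      # 5M run: ~58 Mb/second

echo "messages:  4M ${RATIO_MSG_4M}%, 5M ${RATIO_MSG_5M}% of expected"
echo "bandwidth: 4M ${RATIO_BW_4M}%, 5M ${RATIO_BW_5M}% of expected"
```

On these rounded numbers, message rates land at roughly 94-100% of the expected doubling and bandwidth at roughly 90%.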